Table of Links

-

Discussion and Broader Impact, Acknowledgements, and References

D. Differences with Glaze Finetuning

H. Existing Style Mimicry Protections

4 Robust Style Mimicry

We say that a style mimicry method is robust if it can emulate an artist’s style using only protected artwork. While methods for robust mimicry have already been proposed, we note a number of limitations in these methods and their evaluation in Section 4.1. We then propose our own methods (Section 4.3) and evaluation (Section 5) which address these limitations.

4.1 Limitations of Prior Robust Mimicry Methods and of Their Evaluations

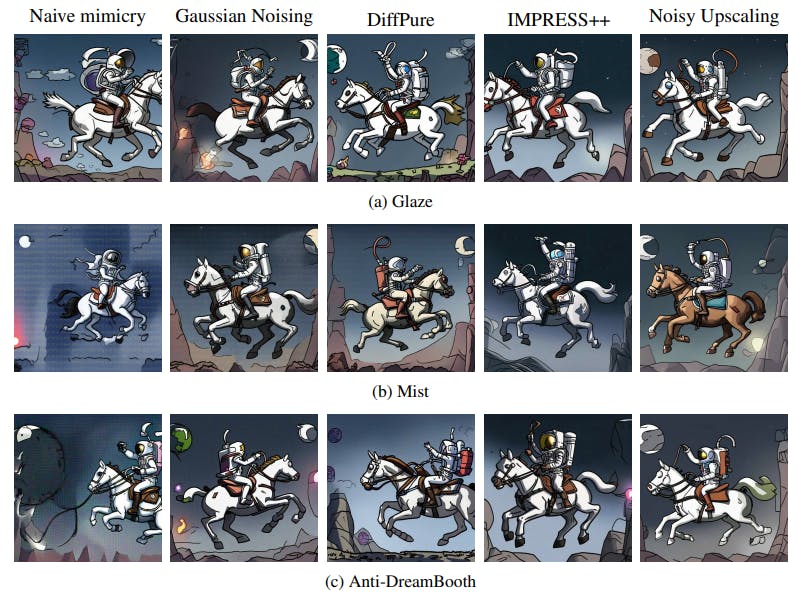

(1) Some mimicry protections do not generalize across finetuning setups. Most forgers are inherently ill-intentioned since they ignore artists’ genuine requests not to use their art for generative AI (Heikkila¨, 2022). A successful protection must thus resist circumvention attempts from a reasonably resourced forger who may try out a variety of tools. Yet, in preliminary experiments, we found that Glaze (Shan et al., 2023a) performed significantly worse than claimed in the original evaluation, even before actively attempting to circumvent it. After discussion with the authors of Glaze, we found small differences between our off-the-shelf finetuning script, and the one used in Glaze’s original evaluation (which the authors shared with us).[1] These minor differences in finetuning are sufficient to significantly degrade Glaze’s protections (see Figure 2 for qualitative examples). Since our off-the-shelf finetuning script was not designed to bypass style mimicry protections, these results already hint at the superficial and brittle protections that existing tools provide: artists have no control over the finetuning script or hyperparameters a forger would use, so protections must be robust across these choices.

(2) Existing robust mimicry attempts are sub-optimal. Prior evaluations of protections fail to reflect the capabilities of moderately resourceful forgers, who employ state-of-the-art methods (even off-the-shelf ones). For instance, Mist (Liang et al., 2023) evaluates against DiffPure purifications using an outdated and low-resolution purification model. Using DiffPure with a more recent model, we observe significant improvements. Glaze (Shan et al., 2023a) is not evaluated against any version of DiffPure, but claims protection against Compressed Upscaling, which first compresses an image with JPEG and then upscales it with a dedicated model. Yet, we will show that by simply swapping the JPEG compression with Gaussian noising, we create Noisy Upscaling as a variant that is highly successful at removing mimicry protections (see Figure 26 for a comparison between both methods).

(3) Existing evaluations are non-comprehensive. Comparing the robustness of prior protections is challenging because the original evaluations use different sets of artists, prompts, and finetuning setups. Moreover, some evaluations rely on automated metrics (e.g., CLIP similarity) which are unreliable for measuring style mimicry (Shan et al., 2023a,b). Due to the brittleness of protection methods and the subjectivity of mimicry assessments, we believe a unified evaluation is needed.

4.2 A Unified and Rigorous Evaluation of Robust Mimicry Methods

To address the limitations presented in Section 4.1, we introduce a unified evaluation protocol to reliably assess how existing protections fare against a variety of simple and natural robust mimicry methods. Our solutions to each of the numbered limitations above are: (1) The attacker uses a popular “off-the-shelf” finetuning script for the strongest open-source model that all protections claim to be effective for: Stable Diffusion 2.1. This finetuning script is chosen independently of any of these protections, and we treat it as a black-box. (2) We design four robust mimicry methods, described in Section 4.3. We prioritize simplicity and ease of use for low-expertise attackers by combining a variety of off-the-shelf tools. (3) We design and conduct a user study to evaluate each mimicry protection against each robust mimicry method on a common set of artists and prompts.

4.3 Our Robust Mimicry Methods

We now describe four robust mimicry methods that we designed to assess the robustness of protections. We primarily prioritize simple methods that only require preprocessing protected images. These methods present a higher risk because they are more accessible, do not require technical expertise, and can be used in black-box scenarios (e.g. if finetuning is provided as an API service). For completeness, we further propose one white-box method, inspired by IMPRESS (Cao et al., 2024).

We note that the methods we propose have been considered (at least in part) in prior work that found them to be ineffective against style mimicry protections (Shan et al., 2023a; Liang et al., 2023; Shan et al., 2023b). Yet, as we noted in Section 4.1, these evaluations suffered from a number of limitations. We thus re-evaluate these methods (or slight variants thereof) and will show that they are significantly more successful than previously claimed.

Black-box preprocessing methods.

✦ Gaussian noising. As a simple preprocessing step, we add small amounts of Gaussian noise to protected images. This approach can be used ahead of any black-box diffusion model.

✦ DiffPure. We use image-to-image models to remove perturbations introduced by the protections, also called DiffPure (Nie et al., 2022) (see Appendix I.1). This method is black-box, but requires two different models: the purifier, and the one used for style mimicry. We use Stable Diffusion XL as our purifier.

✦ Noisy Upscaling. We introduce a simple and effective variant of the two-stage upscaling purification considered in Glaze (Shan et al., 2023a). Their method first performs JPEG compression (to minimize perturbations) and then uses the Stable Diffusion Upscaler (Rombach et al., 2022) (to mitigate degradations in quality). Yet, we find that upscaling actually magnifies JPEG compression artifacts instead of removing them. To design a better purification method, we observe that the Upscaler is trained on images augmented with Gaussian noise. Therefore, we purify a protected image by first applying Gaussian noise and then applying the Upscaler. This Noisy Upscaling method introduces no perceptible artifacts and significantly reduces protections (see Figure 26 for an example and Appendix I.2 for details).

White-box methods.

✦ IMPRESS++. For completeness, we design a white-box method to assess whether more complex methods can further enhance the robustness of style mimicry. Our method builds on IMPRESS (Cao et al., 2024) but adopts a different loss function and further applies negative prompting (Miyake et al., 2023) and denoising to improve the robustness of the sampling procedure (see Appendix I.3 and Figure 27 for details).

Authors:

(1) Robert Honig, ETH Zurich ([email protected]);

(2) Javier Rando, ETH Zurich ([email protected]);

(3) Nicholas Carlini, Google DeepMind;

(4) Florian Tramer, ETH Zurich ([email protected]).

This paper is

[1] The two finetuning scripts mainly differ in the choice of library, model, and hyperparameters. We use a standard HuggingFace script and Stable Diffusion 2.1 (the model evaluated in the Glaze paper).