Table of Links

Discussion and Broader Impact, Acknowledgements, and References

D. Differences with Glaze Finetuning

H. Existing Style Mimicry Protections

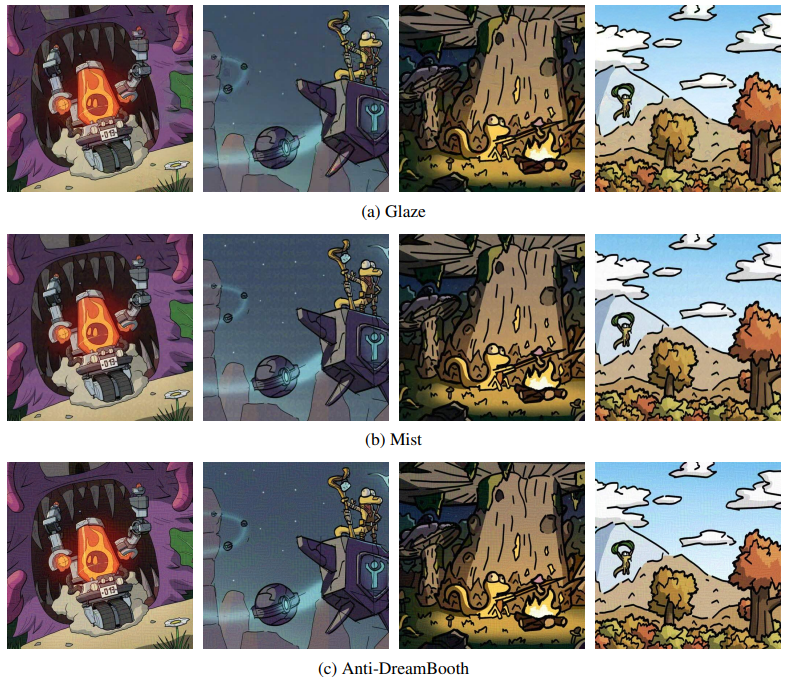

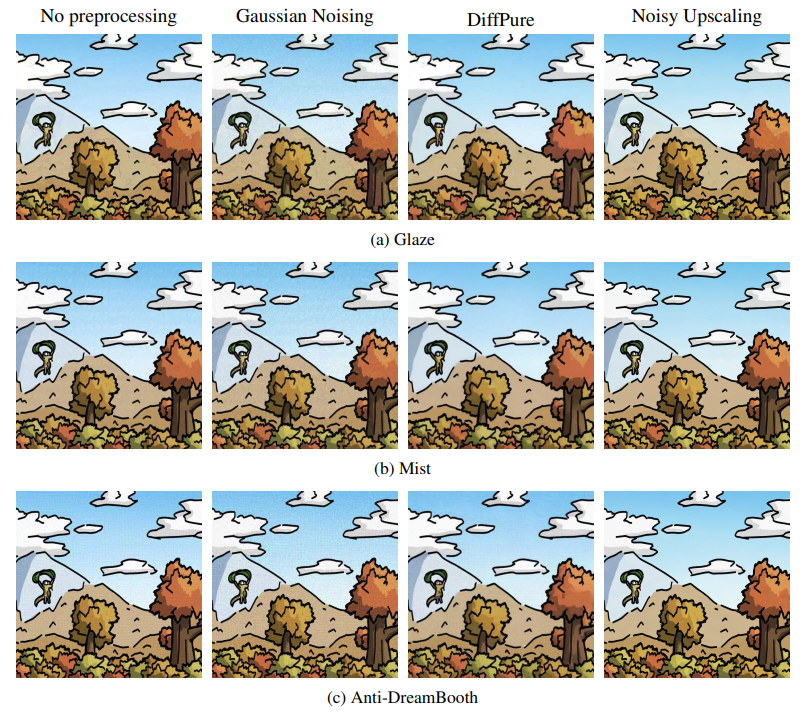

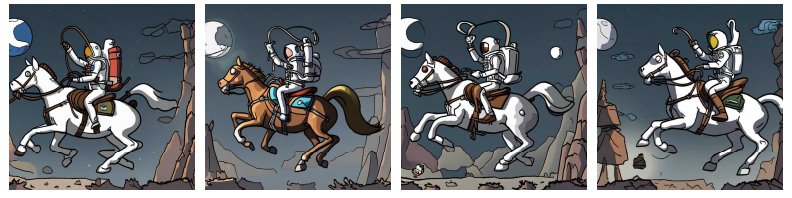

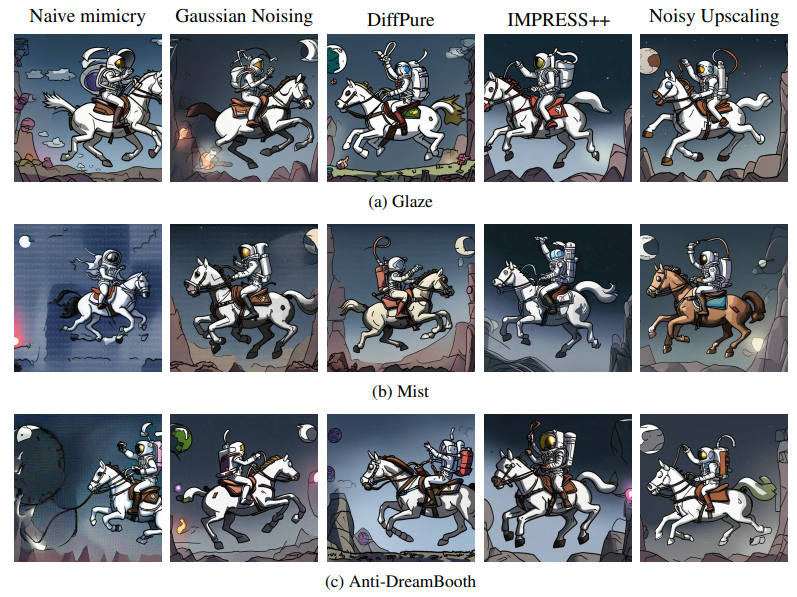

A. Detailed Art Examples

This section illustrates what the images look like at every stage of our work. We include (1) original artwork from a contemporary artist (@nulevoy)[5] as a reference in Figure 6, (2) the original artwork after applying each of the available protections in Figure 7, (3) one image under every combination (the cross product) of protections and preprocessing methods in Figure 8, (4) baseline generations from a model trained on unprotected art in Figure 9, and (5) robust mimicry generations for each scenario in Figure 10.

Authors:

(1) Robert Honig, ETH Zurich;

(2) Javier Rando, ETH Zurich;

(3) Nicholas Carlini, Google DeepMind;

(4) Florian Tramer, ETH Zurich.

This paper is

[5] The artist gave explicit permission for the use of their art.