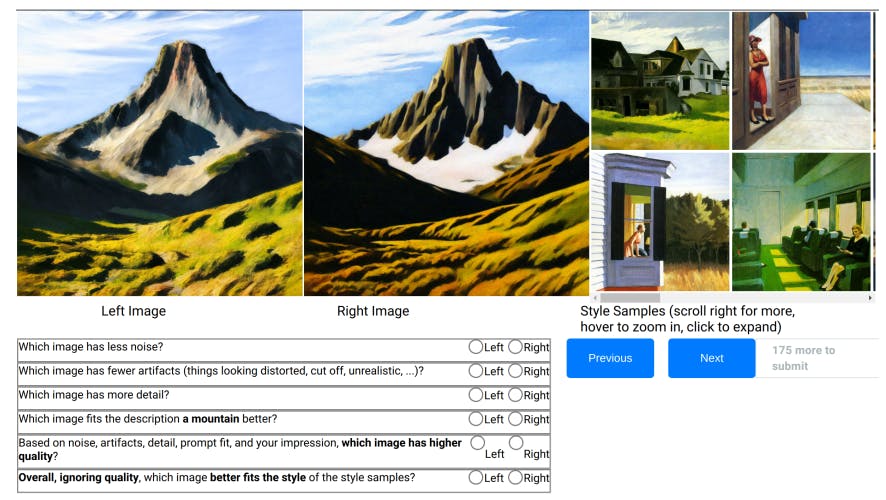

User Study for Evaluating Robust Style Mimicry

15 Dec 2024

Discover the design and methodology behind our user study evaluating robust style mimicry methods, including quality and style assessments using Amazon MTurk.

Compute Resources for Style Mimicry Experiments

15 Dec 2024

Review the compute resources used for our style mimicry experiments, detailing execution times and additional resource requirements for various methods.

How We Evaluated Different AI Techniques for Mimicking Artistic Styles

15 Dec 2024

Explore the experimental setup and hyperparameters used for evaluating robust style mimicry methods, including Stable Diffusion, Glaze, Anti-DreamBooth, and more.

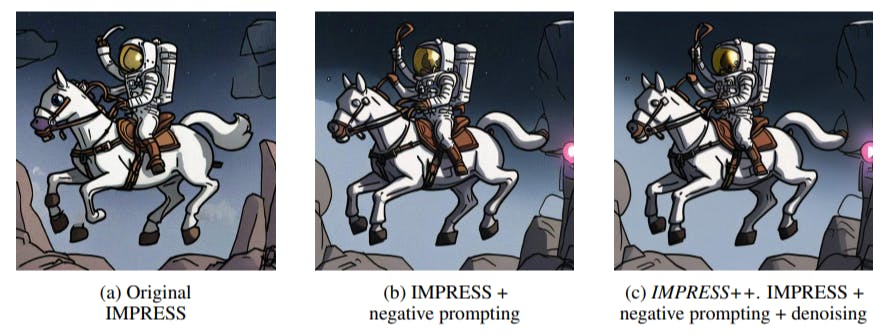

Why AI Art Protections Aren’t as Strong as They Seem

14 Dec 2024

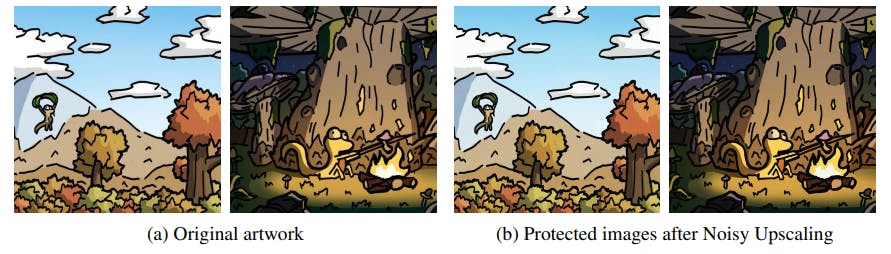

Explore how low-effort techniques like DiffPure, Noisy Upscaling, and IMPRESS++ weaken protections against style mimicry in AI art.

How Are Artists Protecting Their Unique Styles from Imitation in AI Art?

14 Dec 2024

Learn about existing protections against style mimicry in AI art, including encoder and denoiser-based defenses like Glaze, Mist, and Anti-DreamBooth.

The Science Behind AI’s Ability to Recreate Art Styles

14 Dec 2024

Discover the most effective methods for style mimicry in AI art, from simple prompting to finetuning generative models, and how they replicate unknown styles.

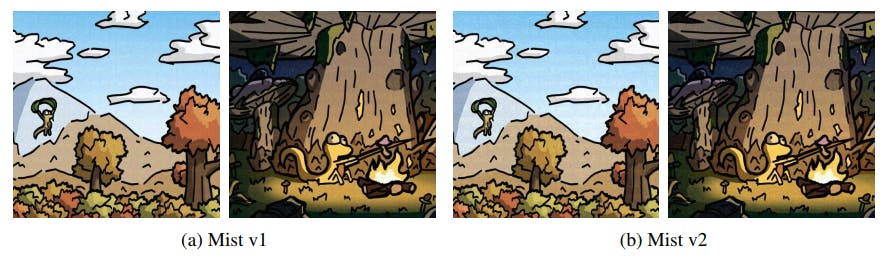

Mist v2 Fails to Defend Against Robust Mimicry Methods Like Noisy Upscaling

13 Dec 2024

Despite improvements in Mist v2, its perturbations are still visible, and robust mimicry methods like Noisy Upscaling easily bypass its protections, showing no progress.

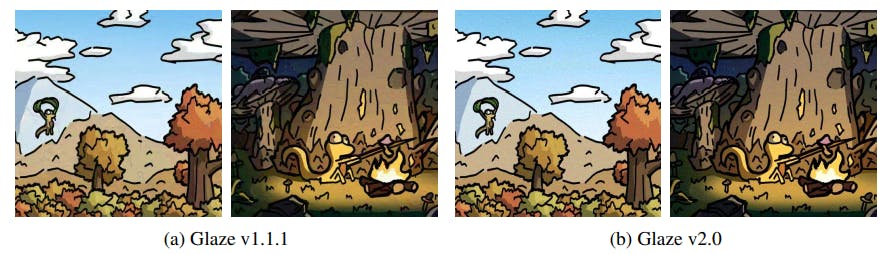

New Glaze 2.0 Tool Still Fails to Stop AI from Copying Artwork

13 Dec 2024

Despite its claimed improvements, Glaze 2.0 does not enhance protection against robust mimicry methods like Noisy Upscaling, and its perturbations are still removable.

The Key to Better Art Style Mimicry

13 Dec 2024

Learn about the differences in finetuning setups between our approach and Glaze, highlighting improvements in baseline style mimicry using Stable Diffusion 2.1.